Kafka Components:

- Producer

- Publishes messages to a topic.

- Each message is appended to one of the topic's partitions.

- Consumer

- Subscribes to messages from a topic; one or more consumers can subscribe to the same topic.

- Consumers that share the work of reading a topic's partitions form a consumer group.

- Two consumers in the same consumer group can NOT read messages from the same partition.
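The rule that two consumers of one group never share a partition can be illustrated with a toy assignment function. This is a minimal sketch under an assumption: it hands out partitions round-robin, similar in spirit to one of Kafka's assignment strategies, but `assign_partitions` is a hypothetical helper, not the Kafka client API.

```python
from typing import Dict, List

def assign_partitions(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Toy round-robin assignment (hypothetical helper, not Kafka's assignor).

    Every partition gets exactly one owner within the group, so no two
    consumers of the same group ever read the same partition.
    """
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for i, partition in enumerate(sorted(partitions)):
        owner = consumers[i % len(consumers)]  # next consumer in turn
        assignment[owner].append(partition)
    return assignment

# Two consumers, three partitions: no partition is shared.
print(assign_partitions([0, 1, 2], ["consumer-a", "consumer-b"]))
# {'consumer-a': [0, 2], 'consumer-b': [1]}

# More consumers than partitions: the extra consumer sits idle.
print(assign_partitions([0, 1], ["a", "b", "c"]))
# {'a': [0], 'b': [1], 'c': []}
```

A side effect of the rule shows up in the second call: adding consumers beyond the number of partitions does not add parallelism, because the extra consumers have nothing to read.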

- Message

- A Kafka message is an array of bytes, optionally accompanied by a piece of metadata called the key.

- Kafka can write messages one at a time (in real time) or in batches.

- Batching increases throughput but also increases latency, so there is a trade-off between latency and throughput.
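The latency/throughput trade-off of batching can be seen in a small simulation. This is a hypothetical toy `Batcher`, not the Kafka producer: it flushes when `max_batch` records are buffered or when the oldest buffered record has waited `linger` time units. It is loosely analogous to the real producer settings `batch.size` and `linger.ms`, except that the real `batch.size` is measured in bytes rather than records.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Record:
    value: bytes                 # payload: an opaque array of bytes
    key: Optional[bytes] = None  # optional metadata (the key)

class Batcher:
    """Toy producer-side batcher (hypothetical, not the Kafka client)."""

    def __init__(self, max_batch: int, linger: float):
        self.max_batch = max_batch
        self.linger = linger
        self.buffer: List[Record] = []
        self.first_arrival: Optional[float] = None
        self.sent: List[List[Record]] = []  # each entry models one network request

    def send(self, record: Record, now: float) -> None:
        if not self.buffer:
            self.first_arrival = now  # the oldest record starts the linger clock
        self.buffer.append(record)
        self.maybe_flush(now)

    def maybe_flush(self, now: float) -> None:
        full = len(self.buffer) >= self.max_batch
        waited = bool(self.buffer) and now - self.first_arrival >= self.linger
        if full or waited:
            self.sent.append(self.buffer)
            self.buffer = []
            self.first_arrival = None

# "Real time": every record is its own request (low latency, many requests).
realtime = Batcher(max_batch=1, linger=0)
# Batched: records wait for companions (fewer requests, extra waiting).
batched = Batcher(max_batch=3, linger=10)
for t in range(3):
    realtime.send(Record(b"click"), now=t)
    batched.send(Record(b"click", key=b"user-1"), now=t)
print(len(realtime.sent), len(batched.sent))  # 3 1
```

In the batched run, three records cost one request, but the first record waited two time units before it was sent; that waiting is the latency paid for the higher throughput.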

- Events

- An event represents a fact that happened in the past.

- Events are immutable and never stay in one place.

- They always travel from one system to another system, carrying the state changes that happened.

- A topic groups related events together and durably stores them.

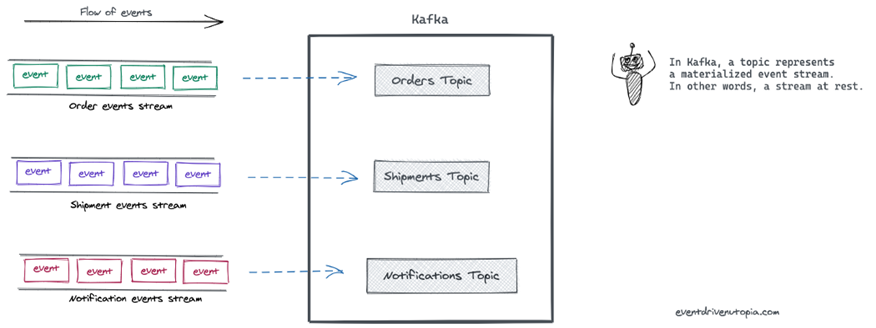

- Streams

- An event stream represents related events in motion.
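Immutability of events and the idea of a stream as related events in order can be modeled with a frozen dataclass. This is a minimal sketch; the `Event` type, its fields, and the order-event names are hypothetical examples, not part of Kafka.

```python
from dataclasses import dataclass, FrozenInstanceError

@dataclass(frozen=True)
class Event:
    """A fact that already happened; frozen, so it cannot be changed after creation."""
    entity_id: str
    kind: str       # hypothetical event type name, e.g. "OrderPlaced"
    payload: tuple  # a tuple rather than a list, so the payload is immutable too

# An event stream: related events (same entity) in the order they occurred.
stream = [
    Event("order-42", "OrderPlaced", (("amount", 30),)),
    Event("order-42", "OrderPaid", (("amount", 30),)),
    Event("order-42", "OrderShipped", ()),
]

try:
    stream[0].kind = "OrderCancelled"  # attempting to rewrite history
except FrozenInstanceError:
    print("events are immutable; append a new event instead")

# A later change of state is recorded as a new event, not an edit.
stream.append(Event("order-42", "OrderCancelled", ()))
```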

- Topic

- A topic is a logical concept in Kafka.

- Producers publish messages to a topic, and consumers consume messages from it.

- A topic can be thought of as a folder in a file system.

- Each topic can have multiple partitions, and messages are stored in those partitions.

- A topic is held and managed by brokers, which store its events.

- A topic is a materialized event stream; in other words, a topic is a stream at rest.

- A topic is a stream of data comprising individual records

- Example:

- A Kafka topic is like a table in a database or a folder in a file system.
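A topic with several partitions, where messages that share a key land in the same partition, can be sketched as a toy class. Assumptions: this `Topic` class is hypothetical, `zlib.crc32` stands in for the murmur2 hash that Kafka's default partitioner uses for keyed records, and keyless records are spread round-robin as a simplification.

```python
import zlib
from typing import List, Optional

class Topic:
    """Toy topic: a named, logical grouping of partitions (not the real Kafka API)."""

    def __init__(self, name: str, num_partitions: int):
        self.name = name
        self.partitions: List[List[bytes]] = [[] for _ in range(num_partitions)]
        self._next = 0  # round-robin cursor for keyless records

    def choose_partition(self, key: Optional[bytes]) -> int:
        if key is None:
            # Simplification: spread keyless records evenly.
            p = self._next % len(self.partitions)
            self._next += 1
            return p
        # Same key -> same hash -> same partition (crc32 stands in for murmur2).
        return zlib.crc32(key) % len(self.partitions)

    def append(self, value: bytes, key: Optional[bytes] = None) -> int:
        p = self.choose_partition(key)
        self.partitions[p].append(value)  # append-only: never insert or overwrite
        return p

orders = Topic("orders", num_partitions=3)
p1 = orders.append(b"created", key=b"user-1")
p2 = orders.append(b"paid", key=b"user-1")
print(p1 == p2)  # True: both events for user-1 share a partition
```

Routing by key is what keeps all events for one entity in a single partition, where their order is preserved.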

- Partition

- Kafka’s topics are divided into several partitions.

- A partition is the smallest storage unit; it holds a subset of the records owned by a topic.

- Each partition is a single log (stored on disk as a sequence of segment files) to which records/messages are written in an append-only fashion.

- Offset

- Each record in a partition is assigned, and identified by, a unique offset.

- This sequence number, assigned to each message in each partition of a Kafka topic, is called the offset.

- As soon as any message arrives in a partition, a number is assigned to that message.

- For a given topic, each partition keeps its own independent sequence of offsets, so the same offset number can appear in several partitions.

- The offset number is always local to the topic partition.
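The append-only partition log and its local offsets fit in a few lines. This is an illustrative sketch, not Kafka's storage code: `PartitionLog` is a hypothetical class, and it simplifies by treating the offset as the record's list position, whereas a real log can have gaps (for example after compaction).

```python
from typing import List, Tuple

class PartitionLog:
    """Toy append-only log for one partition (illustrative, not Kafka's storage code)."""

    def __init__(self) -> None:
        self._records: List[bytes] = []

    def append(self, value: bytes) -> int:
        offset = len(self._records)  # next sequence number in THIS partition
        self._records.append(value)  # records are only ever added at the end
        return offset

    def read(self, offset: int, max_records: int = 10) -> List[Tuple[int, bytes]]:
        """Return up to max_records (offset, value) pairs starting at offset."""
        chunk = self._records[offset:offset + max_records]
        return [(offset + i, v) for i, v in enumerate(chunk)]

# Two partitions of the same topic each count from 0: offsets are local.
p0, p1 = PartitionLog(), PartitionLog()
print(p0.append(b"a"), p0.append(b"b"), p1.append(b"c"))  # 0 1 0
```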

- Node

- A Kafka node hosts a Kafka server (a configured Kafka broker).

- Kafka is a distributed, partitioned and replicated pub-sub messaging system.

- Kafka stores messages in topics (partitioned and replicated across multiple brokers).

- Producers send messages to topics, from which consumers or their consumer groups read them.

- Broker

- A broker is an intermediate entity that exchanges messages between producers and consumers.

- For a Kafka producer, it acts as a receiver; for a Kafka consumer, it acts as a sender.

- Brokers are the heart of Kafka and are also called Kafka servers.

- Each server node in the cluster is a “broker.”

- A broker handles all requests from clients (produce, consume, and metadata) and keeps data replicated within the cluster.

- There can be one or more brokers in a cluster.

- A Kafka broker allows consumers to fetch messages by topic, partition and offset.

- Kafka brokers form a Kafka cluster by sharing information with each other, directly or indirectly through Zookeeper.
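The broker's two roles (receiver for producers, sender for consumers) and fetching by topic, partition and offset can be modeled in memory. This is a minimal sketch; `ToyBroker` and its `produce`/`fetch` methods are hypothetical and do not reflect the Kafka wire protocol or client API.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple

class ToyBroker:
    """In-memory stand-in for a broker (hypothetical; not the Kafka protocol)."""

    def __init__(self) -> None:
        # (topic, partition) -> list of values; list index == offset
        self.logs: DefaultDict[Tuple[str, int], List[bytes]] = defaultdict(list)

    def produce(self, topic: str, partition: int, value: bytes) -> int:
        """Receiver role for producers: append and return the assigned offset."""
        log = self.logs[(topic, partition)]
        log.append(value)
        return len(log) - 1

    def fetch(self, topic: str, partition: int, offset: int,
              max_records: int = 100) -> List[bytes]:
        """Sender role for consumers: return records starting at `offset`."""
        log = self.logs.get((topic, partition), [])  # .get avoids creating empty logs
        return log[offset:offset + max_records]

broker = ToyBroker()
broker.produce("orders", 0, b"created")
broker.produce("orders", 0, b"paid")
print(broker.fetch("orders", 0, offset=1))  # [b'paid']
```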

- Cluster

- A Kafka cluster consists of one or more servers (Kafka brokers) running Kafka.

- It is simply a group of computers working toward a common purpose.

- Like any cluster, it is a group of servers, which in Kafka are called brokers.

- It is a combination of servers, each running a broker; multiple servers running multiple brokers together are called a cluster.

- Producers are processes that push records into Kafka topics held by the brokers.

- A consumer pulls records off a Kafka topic.

- A Kafka cluster is a combination of multiple Kafka nodes.

- Producers send records to the cluster, which stores those records and then passes them to consumers.

- Each broker stores the data provided by producers for a configurable retention period, whether or not consumers have already read it.

- A Kafka cluster has exactly one broker acting as the Controller at any given time.

- Zookeeper

- Management of the brokers in the cluster is performed by Zookeeper.

- There may be multiple Zookeeper servers (an ensemble) serving a cluster.

- It keeps the state of the cluster (brokers, topics, users).

- It manages and coordinates the set of Kafka brokers.

- The Zookeeper service is mainly used to inform Kafka producers and consumers about which brokers are present (or have failed) in the environment or cluster.

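- It also keeps track of Kafka topics, partitions, and offsets (newer Kafka versions store consumer offsets in the internal `__consumer_offsets` topic instead).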
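How a coordination service can tell the rest of the system which brokers are alive can be sketched with a session-timeout registry. This is a hypothetical in-memory model, not the ZooKeeper API: it assumes each broker keeps its entry alive with heartbeats and that the entry lapses once no heartbeat arrives within `session_timeout`. In a real ZooKeeper-based cluster, brokers register ephemeral znodes that vanish when their session ends, which is the behavior this toy imitates.

```python
from typing import Dict, List

class ToyRegistry:
    """Hypothetical in-memory stand-in for a broker registry (not the ZooKeeper API)."""

    def __init__(self, session_timeout: float):
        self.session_timeout = session_timeout
        self.last_heartbeat: Dict[int, float] = {}

    def heartbeat(self, broker_id: int, now: float) -> None:
        """A broker's first heartbeat registers it; later ones keep its session alive."""
        self.last_heartbeat[broker_id] = now

    def live_brokers(self, now: float) -> List[int]:
        """Brokers whose session has not expired, i.e. those still present."""
        return sorted(b for b, t in self.last_heartbeat.items()
                      if now - t < self.session_timeout)

registry = ToyRegistry(session_timeout=6.0)
registry.heartbeat(1, now=0.0)
registry.heartbeat(2, now=0.0)
registry.heartbeat(1, now=5.0)          # broker 2 goes silent
print(registry.live_brokers(now=7.0))   # [1]
```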

- Consumer Group

- A consumer group is a group of consumers.

- Multiple consumers combine to share the workload, like dividing a large task among multiple individuals.

- There can be multiple consumer groups subscribing to the same or different topics.

- Two or more consumers belonging to the same consumer group do not receive the same message.

- They always receive different messages because each partition is assigned to only one consumer in the group, and the group's offset for that partition moves to the next number once a message is consumed.

- A single consumer can read all messages from a single topic, or read messages from a number of Kafka topics; generally, a consumer is configured as part of a consumer group.

- Multiple consumer groups can read from the same topic. Each consumer group maintains its own set of offsets and receives messages independently of other consumer groups on the same topic. Put simply, a message received by Consumer Group 1 will also be received by Consumer Group 2. Within a single consumer group, a consumer will not receive the same messages as the other consumers in that group.
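Per-group offsets are what let Kafka act as a queue within a group and as publish-subscribe across groups. The toy model below tracks committed offsets per (group, partition); the topic contents, group names, and the `poll` helper are hypothetical, and a real consumer commits offsets through the client rather than a shared dict.

```python
from typing import Dict, List, Tuple

# A topic with two partitions, modeled as plain lists (toy data).
topic: Dict[int, List[str]] = {0: ["m0", "m2"], 1: ["m1", "m3"]}

# Committed offsets are tracked per (group, partition), so groups are independent.
committed: Dict[Tuple[str, int], int] = {}

def poll(group: str, partitions: List[int]) -> List[str]:
    """Read everything new in the given partitions for this group, then commit."""
    out: List[str] = []
    for p in partitions:
        start = committed.get((group, p), 0)
        out.extend(topic[p][start:])
        committed[(group, p)] = len(topic[p])  # advances this group's offset only
    return out

# Group "billing": two consumers, each owning one partition (queue-style sharing).
billing_a = poll("billing", [0])
billing_b = poll("billing", [1])
# Group "audit": one consumer owning both partitions (pub-sub: sees every message).
audit = poll("audit", [0, 1])

print(billing_a, billing_b)  # ['m0', 'm2'] ['m1', 'm3']
print(audit)                 # ['m0', 'm2', 'm1', 'm3']
```

The two billing consumers split the messages between them, while the audit group, with its own offsets, still receives every message.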

- Push

- A consumer can be overwhelmed by a flood of messages from the producer.

- It is difficult to deal with diverse consumers, each of which may consume at a different rate.

- Pull

- A consumer pulls records from the broker according to its own capacity.

- Batches are formed per consumer when it pulls the data.

- A fetch can return a batch after a fixed time interval or once a required number of bytes is available (the consumer settings `fetch.max.wait.ms` and `fetch.min.bytes`).
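Pull-based consumption can be sketched as two small functions: the consumer asks only for as many records as it can handle, and the fetch is answered once enough bytes are available or a maximum wait has elapsed. Both `pull` and `fetch_ready` are hypothetical helpers; `fetch_ready` only mirrors the idea behind `fetch.min.bytes` and `fetch.max.wait.ms`, not Kafka's actual fetch implementation.

```python
from typing import List

def pull(log: List[bytes], position: int, max_records: int) -> List[bytes]:
    """The consumer requests at most what it can process (its own capacity)."""
    return log[position:position + max_records]

def fetch_ready(available: List[bytes], waited_ms: int,
                min_bytes: int, max_wait_ms: int) -> bool:
    """Answer the fetch once enough data is available or the wait is long enough."""
    return sum(len(r) for r in available) >= min_bytes or waited_ms >= max_wait_ms

log = [b"aa", b"bb", b"cc"]
# A slow consumer takes two records at a time instead of being flooded.
print(pull(log, 0, max_records=2))  # [b'aa', b'bb']
print(pull(log, 2, max_records=2))  # [b'cc']
```

Because the consumer sets the pace, a slow consumer and a fast one can read the same partition without the broker having to track how fast each can go.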

- Kafka services

- Kafka Broker

- Kafka consumer

- Kafka Producer

- Zookeeper

- Kafka APIs

- Producer API: used to publish a stream of records to a Kafka topic.

- Consumer API: used to subscribe to topics and process their streams of records.

- Streams API: enables applications to act as stream processors, which take an input stream from topic(s) and transform it into an output stream that goes to different output topic(s).

- Connector API: allows users to automate the integration of other applications or data systems with their existing Kafka topics.
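The stream-processor pattern described for the Streams API (input topic in, transformed output topic out) can be illustrated with a toy function. This is a hypothetical sketch over in-memory lists and is not the Kafka Streams API; `process_stream` and the click data are made up for illustration.

```python
from typing import Callable, List, Optional

def process_stream(input_topic: List[str],
                   transform: Callable[[str], Optional[str]]) -> List[str]:
    """Toy stream processor (hypothetical; not the Kafka Streams API).

    Consumes each record from an input topic, applies `transform`, and
    produces the result to an output topic. Returning None drops the
    record, which covers simple filters as well as maps.
    """
    output_topic: List[str] = []
    for record in input_topic:
        result = transform(record)
        if result is not None:
            output_topic.append(result)
    return output_topic

clicks = ["home", "cart", "checkout", "home"]
# Keep only purchase-funnel pages and upper-case them.
funnel = process_stream(clicks, lambda page: page.upper() if page != "home" else None)
print(funnel)  # ['CART', 'CHECKOUT']
```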

Kafka: Queue and Publish-Subscribe:

